Refreshed

Wednesday, March 30, 2022, at 07:08AM

By Eric Richardson

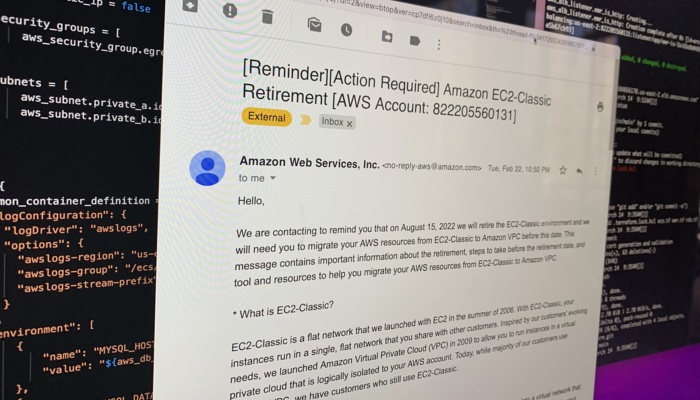

For months I've been getting emails from Amazon saying that my ancient EC2 instance type was set to be retired.

As mentioned in the last post, the bones of this site are a little creaky. It's running on Rails 3.2.15, which came out in 2013, stuck on Ruby 2.1.5, which came out in 2014. It's spent the last eight years running on an m1.small instance in AWS, provisioned using Chef cookbooks that were neat when I wrote them in 2013, but haven't had a thing done to them since.

I didn't even have a good way to deploy an update to change the sidebar to show that I've spent the last six years working for Square and Cash App rather than public radio.

Definitely time for a refresh. I'd been messing around with using a Docker container to get my ancient Ruby running on this M1 iMac, so I decided to shoot for ECS (Elastic Container Service), using an Amazon-provisioned database and Redis.

Fair note here: All of this is overkill for this blog, which should really be hosted as a static site somewhere. We'll cross that bridge later. I figured getting it running correctly was step one to making any other changes. Also, as you can see in the screenshot above, Amazon is getting set to retire the setup my old instance was running in, which puts a cap on how long I can be lazy.

Going in, I was pretty lost on exactly where the industry had moved here. I knew I could get the app running in a Docker container and that would take care of a lot of my environment issues, but I've spent the last six years just deploying apps onto the tooling that platform teams give me, not trying to keep up with developments in AWS.

I knew ECS was a way to run containers, but also saw mentions of Fargate and didn't understand the difference. TLDR: Fargate is just a way to deploy services into ECS without having to know about the EC2 instance underneath.
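
To make the distinction a bit more concrete, here is a rough sketch of what a Fargate task definition might look like, built as a plain Ruby hash and printed as JSON. The family name, image name, and CPU/memory sizes are placeholders made up for illustration, not the values this site uses. The thing to notice is that the definition asks for CPU and memory directly and never names an EC2 instance.

```ruby
require "json"

# Hypothetical values for illustration only; none come from the real setup.
def fargate_task_definition(family:, image:, port: 3000, cpu: "256", memory: "512")
  {
    family: family,
    # Asking for FARGATE means AWS picks and manages the host for you.
    requiresCompatibilities: ["FARGATE"],
    # Fargate tasks must use the awsvpc network mode.
    networkMode: "awsvpc",
    # On Fargate, CPU and memory are sized per task, not per instance.
    cpu: cpu,
    memory: memory,
    containerDefinitions: [
      {
        name: "web",
        image: image,
        essential: true,
        portMappings: [{ containerPort: port, protocol: "tcp" }]
      }
    ]
  }
end

TASK_DEFINITION = fargate_task_definition(family: "blog-web", image: "example/blog:latest")

puts JSON.pretty_generate(TASK_DEFINITION)
```

The same task on the EC2 launch type would list `EC2` instead, and you would be responsible for keeping instances registered in the cluster for it to land on.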

I struggled for a while to just get a handle on which AWS pieces I was going to need. This blog is a Rails app with a MySQL database and a Redis instance for caching. Traditionally it used a local filesystem to store image assets.

There are lots of "how to deploy a Rails app in ECS" blog posts, but this series of posts probably helped me walk through the concepts the best.

I needed to:

1) Build my image
2) Get it to a container registry
3) Have a database
4) Build out an ECS cluster with a task using my container
5) Have a load balancer point to the containers
6) Lots of other stuff
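
Steps 1 and 2 boil down to a handful of shell commands. As a sketch, this Ruby script only assembles and prints them as a dry run, without executing anything. It assumes Amazon ECR as the registry (the list above only says "a container registry"), and the account ID, region, repository name, and tag are all made up.

```ruby
# Dry run: build the commands for steps 1 and 2 without running them.
# Assumes Amazon ECR; account, region, repo, and tag are hypothetical.
def ecr_registry(account_id, region)
  "#{account_id}.dkr.ecr.#{region}.amazonaws.com"
end

def build_and_push_commands(account_id:, region:, repo:, tag:)
  registry = ecr_registry(account_id, region)
  remote   = "#{registry}/#{repo}:#{tag}"

  [
    # 1) Build the image from the Dockerfile in the current directory.
    "docker build -t #{repo}:#{tag} .",
    # 2a) Log Docker in to the registry with a short-lived token.
    "aws ecr get-login-password --region #{region} | " \
      "docker login --username AWS --password-stdin #{registry}",
    # 2b) Tag the local image with the registry address, then push it.
    "docker tag #{repo}:#{tag} #{remote}",
    "docker push #{remote}"
  ]
end

COMMANDS = build_and_push_commands(
  account_id: "123456789012", region: "us-west-2", repo: "blog", tag: "0.0.1"
)

puts COMMANDS
```

Printing the commands rather than running them keeps the sketch safe to try anywhere; swapping `puts` for a loop over `system` would be the obvious next step once the values are real.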

On the application side, I needed to get off that local storage and get assets hosted on S3 instead. Conveniently, I had apparently started that effort years ago and had an S3 bucket with a bunch of image files in it. Inconveniently, I had left it half-done and without any real notes on what was or wasn't working. Images on this site use an engine called AssetHost, which I wrote at SCPR and released as open source on GitHub. In my second stint at SCPR I had ported AssetHost to use S3 for a backend (we actually used a locally-hosted S3-compatible interface through Riak CS, but that's a different story), but hadn't ever fully merged that back to the main repo.

In the end, it's debatable whether any of this pre-work was actually helpful: it left me thinking these changes were closer to complete than they were, which lured me away from just making the smallest possible updates to get things running.

I couldn't ever figure out how to get Ruby 2.1.5 to build on M1 and/or modern OSX, but I used Docker Compose to get the app running in a container by taking Ubuntu 14.04 and compiling Ruby 2.1.5 inside of it. I built my own, but my Dockerfile for the Ruby container looked very similar to this one.

My eventual Dockerfile for the app looked like this:

    FROM ewr/ruby-2.1.5:0.0.1

    RUN mkdir -p /app
    RUN mkdir -p /rails

    WORKDIR /app

    COPY Gemfile Gemfile.lock ./
    RUN bundle install --jobs 20 --retry 5

    RUN mkdir -p /puma/pids
    RUN mkdir -p /puma/sockets

    COPY . ./

    RUN RAILS_ENV=production DATABASE_URL=nulldb://user:pass@127.0.0.1/dbname bundle exec rake assets:precompile

    EXPOSE 3000

    CMD ["bundle", "exec", "puma", "-C", "config/docker_puma.rb", "-p", "3000"]

Note on the asset compilation: In Rails 3 you could just add config.assets.initialize_on_precompile = false to not load the Rails environment when compiling. That didn't work for me, because a piece of the AssetHost JavaScript wants to know where the AssetHost engine is mounted so it can hardcode some paths. I needed the app to start up, but without any DB at this stage in the build. nulldb let me get there. Later I think I refactored away the need to initialize the app entirely, but I haven't revisited the step yet.
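
For anyone wondering why a fake URL is enough here: the adapter is chosen from the URL scheme, so `nulldb://` routes to the NullDB adapter (presumably from the activerecord-nulldb-adapter gem), and the host and credentials are never used to open a real connection. The sketch below is not Rails' own parser, just an approximation using Ruby's standard `URI` library to show how such a URL breaks down into connection settings.

```ruby
require "uri"

# Approximates how a DATABASE_URL maps onto connection settings.
# This is an illustration, not the parser Rails itself uses.
def database_settings(url)
  uri = URI.parse(url)
  {
    adapter:  uri.scheme,                # "nulldb" selects the NullDB adapter
    username: uri.user,
    password: uri.password,
    host:     uri.host,
    database: uri.path.sub(%r{\A/}, "")  # drop the leading slash
  }
end

SETTINGS = database_settings("nulldb://user:pass@127.0.0.1/dbname")

SETTINGS.each { |key, value| puts "#{key}: #{value}" }
```

Everything after the scheme is filler in the build step: it only has to be well-formed enough to parse.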

Eventually I got the app running in Docker, using S3 for asset storage, and everything was looking up.

Then I had to figure out the AWS stuff.

I made a first pass in February just clicking buttons in the AWS console, following the blog posts I linked above. They helped me understand some pieces, but I didn't get anything working.

This month, in the effort that finally stuck, I started from this Fargate / Terraform tutorial and actually got somewhere.

I've stuck my Terraform code up on GitHub. I extended the tutorial to add RDS, Redis, a worker task, and some Route 53 bits around domain validation for the SSL certificate.

So what's not done yet?

I have no clue how health checks work, and am probably doing wrong things there.

I still have to manually build images and trigger a deploy. I need to hook into something from GitHub.

There's no reason a worker should be hanging around all the time when I only upload an image here once every few weeks... That worker should be a Lambda, and my Resque queue should just be SQS.

I should stick Cloudflare or something in front of the images, rather than serving them directly.

And, as mentioned before, this should all just be statically generated.

But still... Progress, right?